Augmented Reality in Medicine

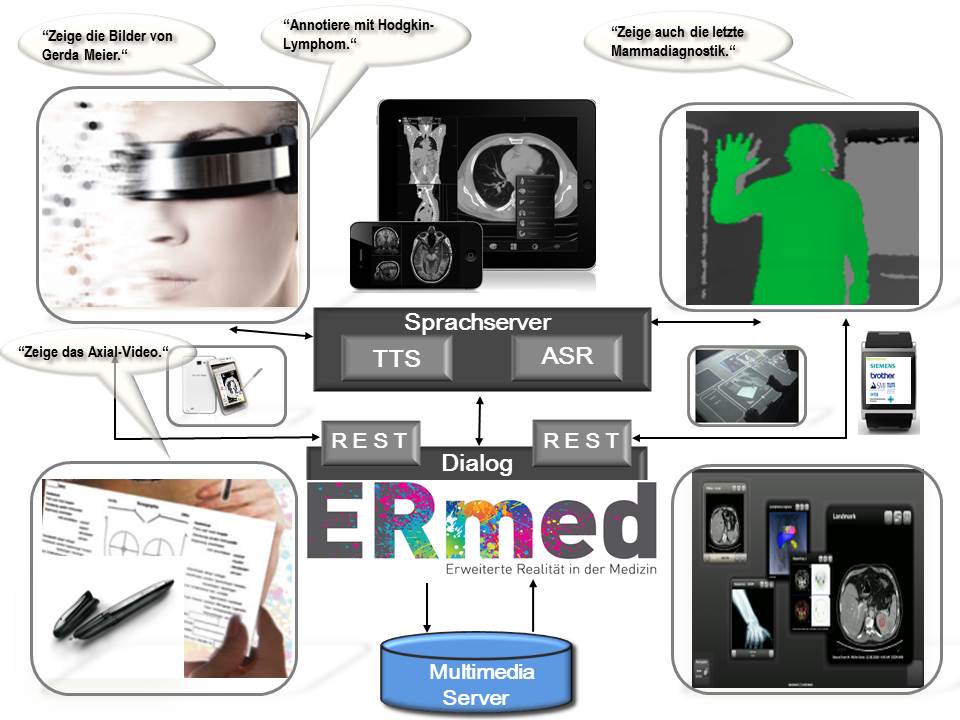

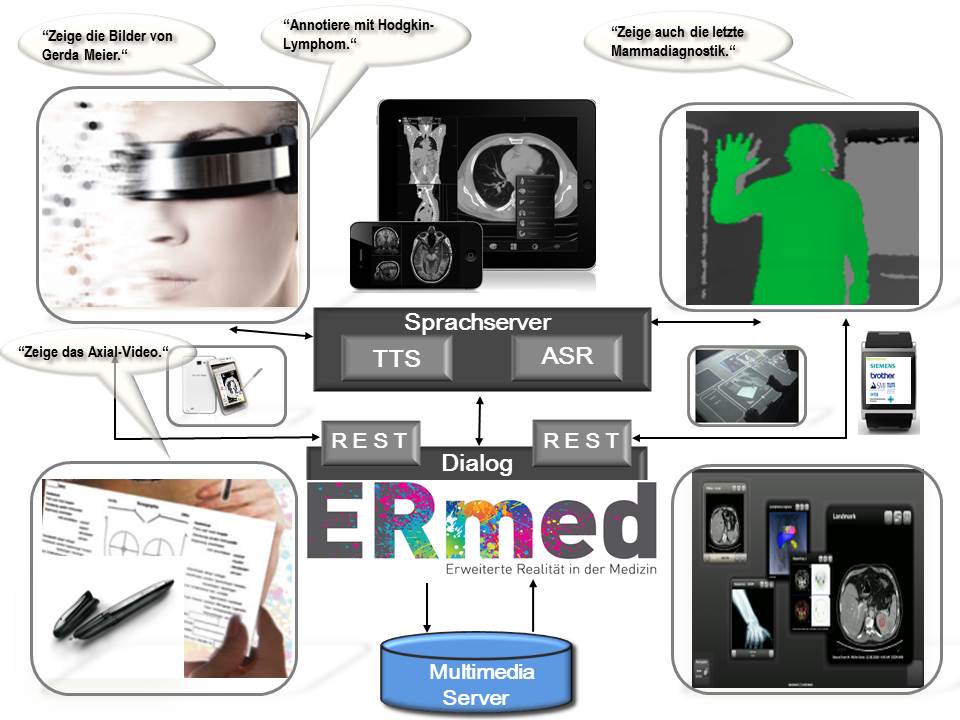

In ERmed, we enter the augmented and mixed reality realm, thereby combining multiple input and output modalities.

Mixed reality refers to the merging of real and virtual worlds to produce new environments and visualisations where physical and digital objects co-exist and interact in real time.

Situation awareness meets mutual knowledge for augmented cognition.

Test video

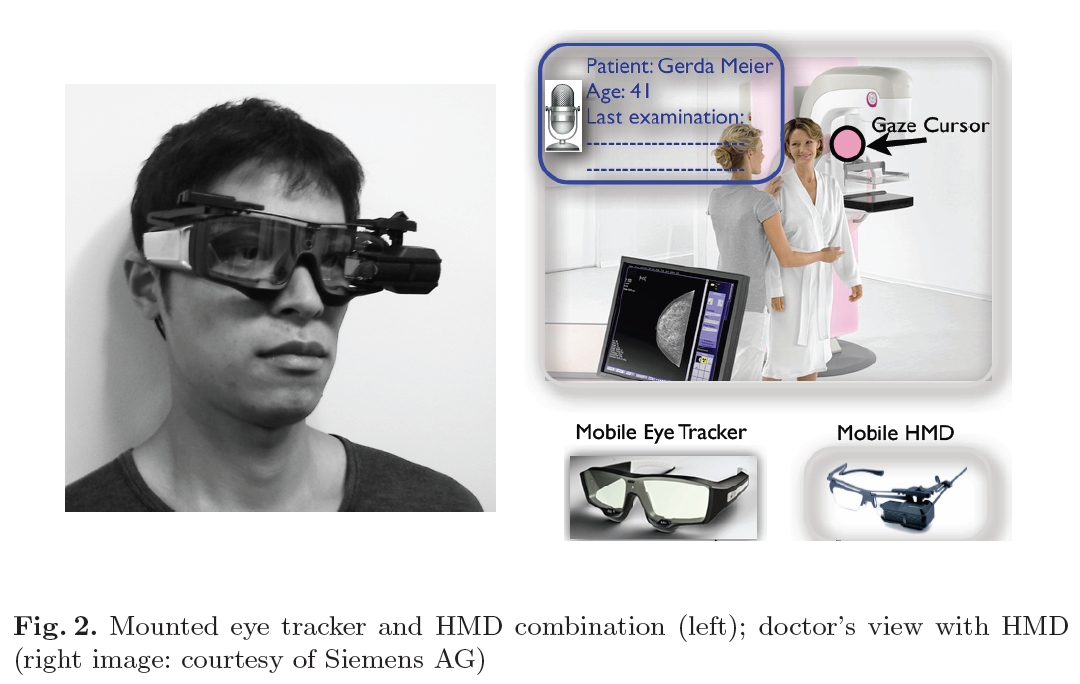

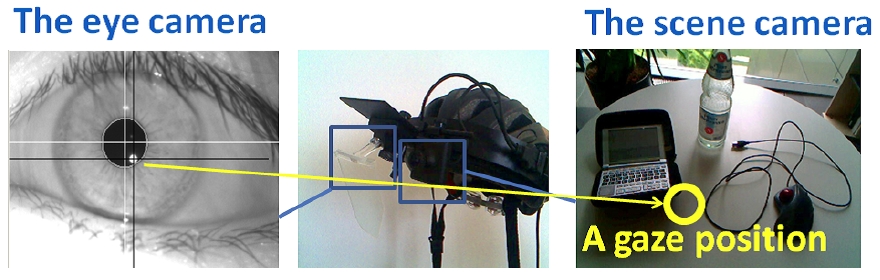

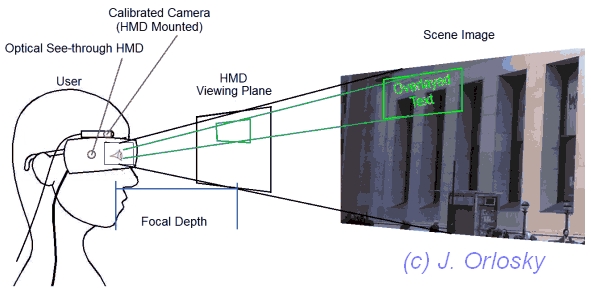

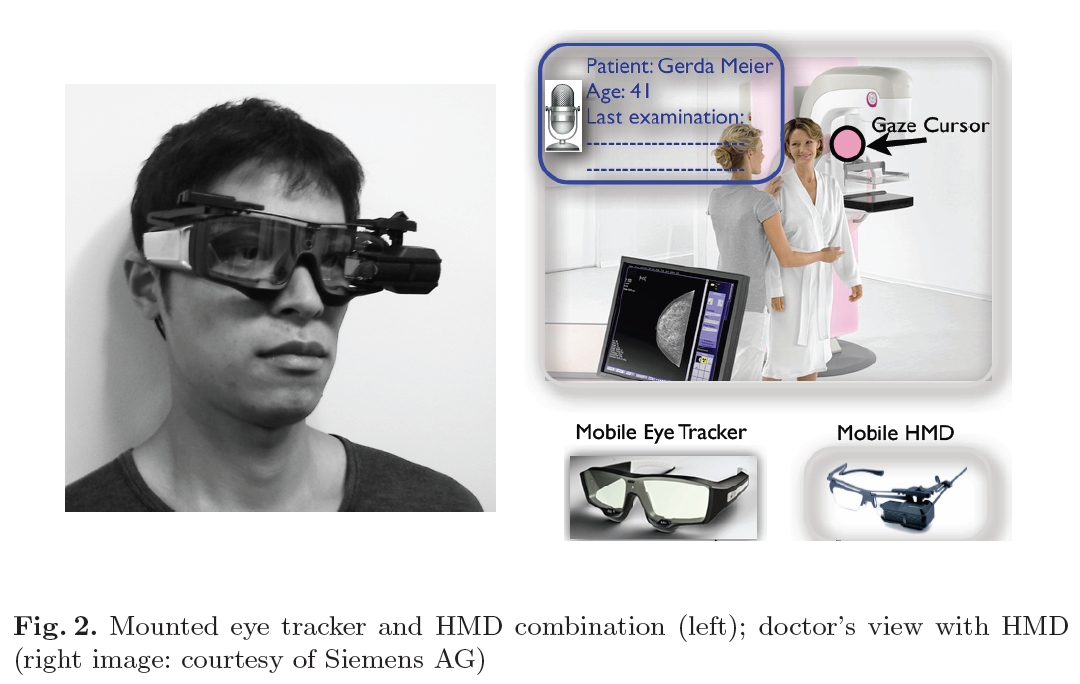

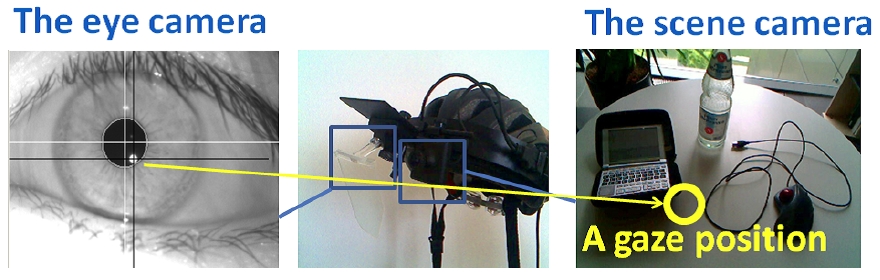

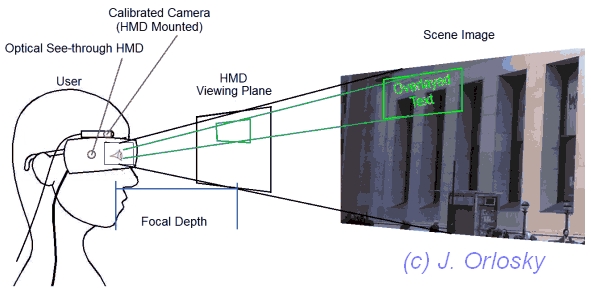

- World Innovation 1: We synchronise mobile eye trackers with optical see-through head-mounted displays and register the environment.

- World Innovation 2: We detect and track objects in a terminator view in real time for augmented cognition.

- World Innovation 3: We use a binocular eye tracker to register the augmented virtuality with the user's line of sight. See our first domain-independent application.
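The page does not describe how gaze is registered with the head-mounted display. As a rough illustration only, the following Python sketch assumes that gaze points reported in the eye tracker's scene-camera coordinates can be mapped to HMD screen coordinates by a planar affine transform, fitted by least squares from calibration targets the user fixates. The functions `fit_affine` and `map_gaze` are hypothetical and are not part of ERmed; a real optical see-through setup would likely need a richer model (for example, a homography or a depth-dependent correction).

```python
from typing import List, Tuple

Point = Tuple[float, float]

# Hypothetical sketch: assumes a planar affine gaze-to-display mapping,
# which is a simplification and not the ERmed calibration method.


def _det3(m: List[List[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (
        m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
        - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
        + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0])
    )


def _solve3(m: List[List[float]], v: List[float]) -> List[float]:
    """Solve m @ x = v for a 3x3 system using Cramer's rule."""
    d = _det3(m)
    if abs(d) < 1e-12:
        raise ValueError("calibration points are degenerate (collinear?)")
    out = []
    for col in range(3):
        # Replace column `col` with v and take the determinant ratio.
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = v[r]
        out.append(_det3(mc) / d)
    return out


def fit_affine(gaze: List[Point], screen: List[Point]) -> List[List[float]]:
    """Least-squares affine map from gaze coordinates to screen coordinates.

    Returns [[a, b, c], [d, e, f]] such that
    screen_x = a*gx + b*gy + c and screen_y = d*gx + e*gy + f.
    """
    if len(gaze) != len(screen) or len(gaze) < 3:
        raise ValueError("need at least 3 matching point pairs")
    # Accumulate the normal equations (A^T A) p = A^T t, with A rows [gx, gy, 1].
    ata = [[0.0] * 3 for _ in range(3)]
    atx = [0.0] * 3
    aty = [0.0] * 3
    for (gx, gy), (sx, sy) in zip(gaze, screen):
        row = (gx, gy, 1.0)
        for i in range(3):
            for j in range(3):
                ata[i][j] += row[i] * row[j]
            atx[i] += row[i] * sx
            aty[i] += row[i] * sy
    return [_solve3(ata, atx), _solve3(ata, aty)]


def map_gaze(params: List[List[float]], gaze: Point) -> Point:
    """Apply a fitted affine map to a single gaze sample."""
    gx, gy = gaze
    (a, b, c), (d, e, f) = params
    return (a * gx + b * gy + c, d * gx + e * gy + f)
```

For example, fitting four calibration pairs generated from the map screen = (2*gx + 10, 0.5*gy - 5) recovers those coefficients, and `map_gaze(params, (50, 50))` then returns approximately (110, 20). The affine model is chosen here only because it is the simplest mapping that can be fitted in closed form; it ignores perspective and parallax between the eye, the display and the scene.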

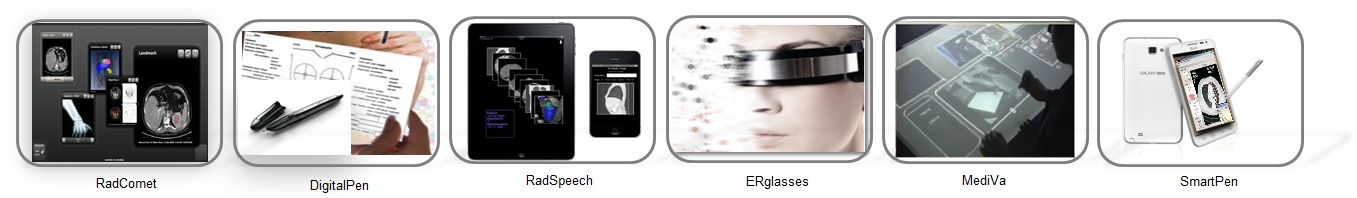

ERmed is a DFKI project based on RadSpeech.

Principal investigator: Dr. Daniel Sonntag (DFKI)

Associated researchers and developers: Markus Weber (DFKI), Takumi Toyama (DFKI), Mikhail Blinov (Russian Academy of Arts), Christian Schulz (DFKI), Alexander Prange (DFKI), Kirill Afanasev (DFKI), Tigran Mkrtchyan (DFKI), Nikolai Zhukov (DFKI), Jason Orlosky (Osaka University)

Medical consultants: Prof. Dr. Alexander Cavallaro (ISI Erlangen), Dr. Matthias Hammon (ISI Erlangen)

To provide a new foundation for managing dynamic content and to improve the usability of optical see-through HMD and mobile eye tracker systems, we implement a salience-based activity recognition system and combine it with an intelligent multimodal management system for multimedia text and images, including their movement.

News:

- April 2014: KOGNIT kick-off! Fundamental research grant, funded by:

- March 2014: Demo at IUI 2014

- January 2014: First evaluation of users' ability to accurately focus on virtual icons in each of several focus planes despite having only monocular depth cues, confirming the viability of these methods for interaction.

- December 2013: Second evaluation at ELTE in Budapest, focussing on self-calibrating eye tracking, robust gaze-guided object recognition, and how "artificial salience" can (un)consciously modulate gaze.

- October 2013: In Budapest, Takumi and Jason work on combining eye gaze with dynamic text management, which allows user-centric text to move along a user's path in real time.

- July 2013: We start the "ELTE Building Project" (NIPG), featuring eye gaze-based location awareness and indoor navigation.

- April 2013: We combine SMI's eye tracker with Brother's Airscouter by means of a new glasses frame.

- March 2013: Jason Orlosky (Osaka University) wins an IUI best paper award on dynamic text management.

- February

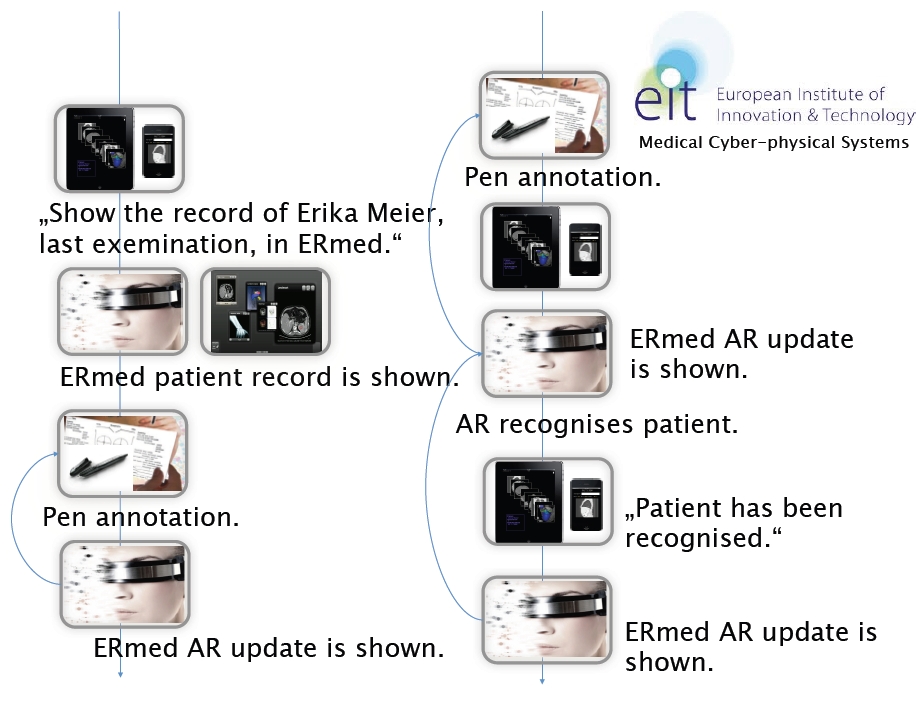

2013: EIT Medical

CPS Kick-off. ERmed delivers sitation awareness cues for

patient monitoring.

- December 2012: Interview with Daniel Sonntag in (Industry 4.0), in German


Medical Application Example:

Architecture
